The revolution derived from the rise and spread of computers posed a before and after, standing out in the dizzying

The root of this development was the born of the CPU. As we well know, these are general-purpose devices, designed to execute sequential code. However, in recent years, high computational cost applications emerged, which generated the need for specific hardware architectures.

One solution consisted of creating graphics processors (GPUs), which were introduced in the 1980s to free CPUs from demanding tasks, first with the handling of 2D pixels, and then with the rendering of 3D scenes, which involved a great increase in their computing power. The current architecture of hundreds of specialized parallel processors results in high efficiency for executing operations, which led to their use as general-purpose accelerators. However, if the problem to be solved is not perfectly adapted, development complexity increases, and performance decreases.

What are FPGAs?

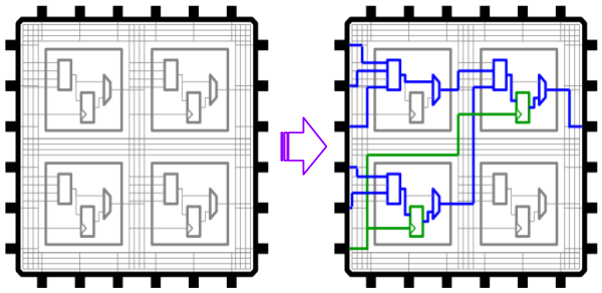

Both CPUs and GPUs have fixed hardware architecture. The alternative with reconfigurable architecture, are the devices known as Field-programmable Gate Array (FPGA). They are made up of logical blocks (they solve simple functions and, optionally, save the result in a register) and a powerful interconnection matrix. They can be considered integrated blank circuits, with a great capacity for parallelism, which can be adapted to solve specific tasks in a performative way.

What are they for us?

The general concept behind the use of this technology is that a complex computationally demanding algorithm moves from an application running on the CPU to an accelerator implemented on the FPGA. When the application requires an accelerated task, the CPU transmits the data and continues with its tasks, the FPGA processes them and returns them for later use, freeing the CPU from the said task and executing it in less time.

The acceleration factor to obtain will depend on the algorithm, the amount and type of data. It can be expected from a few times to thousands, that in processes that take days to compute translates it down to hours or minutes. This is not only an improvement in the user experience but also a decrease in energy and infrastructure costs.

While any complex and demanding algorithm is potentially accelerable, there are typical use cases, such as:

Deep / Machine Learning and Artificial Intelligence in general

Predictive Analysis type applications (eg Advanced fraud detection), Classification (eg New customer segmentation, Automatic document classification), Recommendation Engines (eg Personalized Marketing), etc. Accelerated frameworks and libraries are available such as TensorFlow, Apache Spark ML, Keras, Scikit-learn, Caffe, and XG Boost.

Financial Model Analysis

Used in Banks, Fintech, Insurance, for example, to detect fraudulent transactions in real-time. Also for Risk Analysis (with CPU, banks can only perform risk models once a day, but with FPGAs, they can perform this analysis in real-time). In finance, algorithms such as the accelerated Monte Carlo are used, which estimates the variation over time of stock instruments.

Computer vision

Interpretation of Medical or Satellite Images, etc. with accelerated OpenCV algorithms.

Video processing in real-time: Used in all kinds of automotive applications, Retail (Analytics in Activity in Stores), Health, etc. using OpenCV accelerated algorithms and FFmpeg tools.

Big data

Real-time analysis of large volumes of data, for example, coming from IoT devices via Apache Spark.

Data centers

Hybrid Data Centers, with different types of calculations for different types of tasks (CPU, GPU, FPGA, ASIC).

Security

Cryptography, Compression, Audit of networks in real-time, etc.

Typically, accelerators are invoked from C / C ++ APIs, but languages such as Java, Python, Scala or R can also be used and distributed frameworks such as Apache Spark ML and Apache Mahout

Some disadvantages

Not all processes can be accelerated. Similar to what happens with GPUs, applying this technology erroneously entails slow execution, due to the penalty for moving data between devices.

In addition, it must be taken into account that a server infrastructure with higher requirements is required for its development and use. Finally, to achieve a high-quality final product, highly specialized know-how is needed, which is scarce in today’s market.

Summary

In recent years, FPGA technology has approached the world of software development thanks to accelerator boards and cloud services. The reasons for using them range from achieving times that are not otherwise met or improving the user experience, to reducing energy and accommodation costs.

Any software that involves complex algorithms and large amounts of data is a candidate for hardware acceleration. Some typical use cases include, but are not limited to, genomic research, financial model analysis, augmented reality and machine vision systems, big data processing, and computer network security.